This essay is Chapter 13 in Mr. Taylor’s Union At All Costs: From Confederation to Consolidation (2016).

“I supported President Lincoln. I believed his war policy would be the only way to save the country, but I see my mistake. I visited Washington a few weeks ago, and I saw the corruption of the present administration—and so long as Abraham Lincoln and his Cabinet are in power, so long will war continue. And for what? For the preservation of the Constitution and the Union? No, but for the sake of politicians and government contractors.”[1] J.P. Morgan, American financier and banker, 1864.

The assertion that Lincoln genuinely attempted to avoid war has been preached since General Lee’s surrender at Appomattox. The testimony of a Southern peace representative who spoke with Lincoln on April 4, 1861, in an effort to avert war provides keen insight into a side of the issue seldom heard or taught.[2]

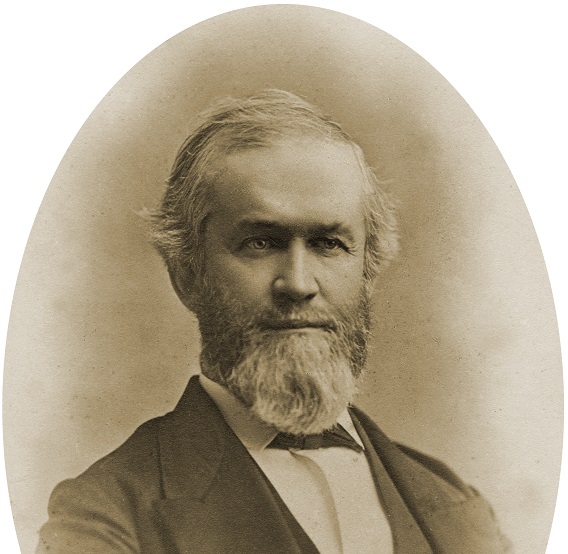

Some historians dismiss the importance of the meeting between Lincoln and Colonel John Brown Baldwin, but it is beyond dispute that the meeting happened and that pivotal issues were seriously discussed. On February 10, 1866, Baldwin testified before the Joint Committee on Reconstruction in Washington, D.C. His comments appeared in a pamphlet published in 1866 by the Staunton Spectator, and he had already given his account to a fellow Confederate in 1865, just before the end of the war.

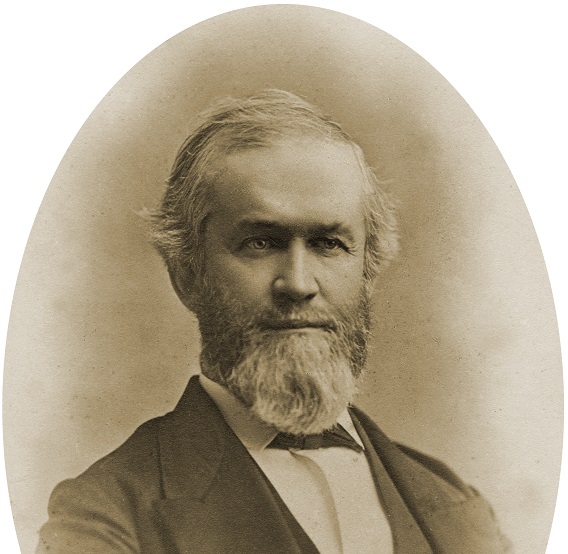

Reverend Robert L. Dabney, Chief of Staff to Stonewall Jackson, met Baldwin in March 1865 in Petersburg, Virginia, while the Army of Northern Virginia was under siege. Baldwin told Dabney that, before hostilities began, he had been selected by the Virginia Secession Convention to meet secretly with Lincoln in April 1861 and negotiate a peaceful settlement. This meeting took place while the Virginia legislature was debating the secession issue.

The citizens of the Southern States were well aware of the disadvantages they faced. The failure of the Peace Congress, the rejection of the Crittenden Amendment, and the clandestine arming of the Federal government raised concerns in the South that war might be on the horizon.

Lingering frustration remained in the South over the failed compromise effort of the Confederate commissioners A.B. Roman, Martin Crawford, and John Forsyth. As sectional hostility continued to fester, further attempts at peace became critical. Most Virginians were strong Unionists, a fact mirrored in the makeup of the anti-secession Virginia Convention. Considering the situation dire, representatives from Virginia decided to make another attempt to defuse the sectional schism.

William Ballard Preston, an anti-slavery defense lawyer and prominent member of the Virginia Convention, summed up the concerns of Virginians about the direction of the country:

If our voices and votes are to be exerted farther to hold Virginia in the Union, we must know (emphasis author) what the nature of the Union is to be. We have valued Union, but we are also Virginians, and we love the Union only as it is based upon the Constitution. If the power of the United States is to be perverted to invade the rights of States and of the people, we would support the Federal Government no farther. And now that the attitude of that Government was so ominous of usurpation, we must know whither it is going, or we can go with it no farther.[3]

Preston was disturbed by the threat of coercion through federal overreach and by the possibility that it would destroy the voluntary relationship of the compact. His view paralleled that of Robert E. Lee, who refused to participate in the invasion of the seceded States.[4]

Secretary of State William H. Seward sent a messenger, Allen B. Magruder, to consult with members of the Virginia Convention and request that they send a representative to Washington to confer with the President. Lincoln’s preference was G.W. Summers, a pro-Unionist from the western part of Virginia. The Virginia members consulted included Mr. John Janney, Convention President, Mr. John S. Preston, Mr. A.H.H. Stuart, and others. Because the mission was to be discreet, the Convention did not send Summers but instead sent a lesser-known representative, John Brown Baldwin. Though Baldwin lacked the prominence of other potential candidates, he was eminently qualified and widely respected. As Stuart’s brother-in-law, he also had strong inside support from a key Convention member.

Baldwin’s credentials included graduation from Staunton Academy and the University of Virginia, combined with a reputation as a capable lawyer and a man of integrity. He was also one of Virginia’s strongest Unionists. Though somewhat reluctant, Baldwin recognized the magnitude of the mission and dutifully accepted the role of Virginia’s representative.

Dabney summarized Baldwin’s instructions:

Mr. Magruder stated that he was authorized by Mr. Seward to say that Fort Sumter would be evacuated on the Friday of the ensuing week, and that the Pawnee would sail on the following Monday for Charleston, to effect the evacuation. Mr. Seward said that secrecy was all important, and while it was extremely desirable that one of them should see Mr. Lincoln, it was equally important that the public should know nothing of the interview.[5]

Baldwin and Magruder prepared for their trip to Washington, choosing to travel the Acquia Creek Route. On April 4, Baldwin rode with Magruder, in a carriage with raised glasses (for maximum secrecy), to meet Seward. Seward took Baldwin to the White House, arriving shortly after 9:00 A.M. The porter admitted them immediately and, with Seward, led Baldwin to “what he (Baldwin) presumed was the President’s ordinary business room, where he (Baldwin) found him in evidently anxious consultation with three or four elderly men, who appeared to wear importance in their aspect.”[6]

Though these gentlemen appeared to be very influential, Baldwin apparently did not know them, since he did not identify them when he recounted the meeting.

Seward informed Lincoln of his guest’s arrival, whereupon Lincoln immediately excused himself from the meeting, took Baldwin upstairs to a bedroom, and formally greeted his visitor: “Well, I suppose this is Colonel Baldwin of Virginia? I have hearn [sic] of you a good deal, and am glad to see you. How d’ye do, sir?”[7]

Baldwin presented his credentials. Lincoln sat on the bed and occasionally spat on the carpet as he read through them. Once satisfied with the introduction, Lincoln conveyed that he was aware of the purpose of the visit.

Lincoln admitted that Virginians were good Unionists, but he did not favor their kind of conditional Unionism. He was nevertheless willing to hear Virginia’s proposal for a resolution. Baldwin reaffirmed Virginia’s belief in the Constitution as written and stated that Virginia would not subscribe to a conflict based on the sectional, free-soil question. He told Lincoln that, however much Virginia opposed his platform, she would support him as long as he adhered to the Constitution and the laws of the land.

To lessen the acrimony that arose from the election, Baldwin suggested that Lincoln issue a simple proclamation asserting that his administration would respect the Constitution, the rule of law, and the rights of the States. This proclamation should also express a willingness to clear up the misunderstandings and motives of each side. Baldwin told Lincoln that Virginia would assist and stand by him, even to the point of treating him like her native son, George Washington. Driving his point home, Baldwin added, “So sure am I, of this, and of the inevitable ruin which will be precipitated by the opposite policy, that I would this day freely consent, if you would let me write those decisive lines, you might cut off my head, were my own life my own, the hour after you signed them.”[8]

He also suggested that Lincoln “call a national convention of the people of the United States and urge upon them to come together and settle this thing.”[9]

Furthermore, Baldwin urged, Lincoln should make it clear that the seceded States would not be forced back into the Union militarily, but that a course of compromise and conciliation would instead be pursued to bring them back. According to Baldwin, if Lincoln simply agreed to this proposition, Virginia would use all possible influence to keep the Border States in the Union and to convince the seven States that had already seceded to rejoin. Baldwin made it clear that Virginia would never support unconstitutional attempts to coerce the seceded States against the will of their people.

The fate and direction of the Constitutional Union sat squarely on Lincoln’s shoulders; he had the power to defuse the situation. Baldwin did everything he could to convince Lincoln that the secession movement could be put down, stressing that Virginia was eager and willing to help.

During the conversation, it became obvious to Baldwin that slavery was not Lincoln’s primary concern. Digesting Lincoln’s comments, Baldwin began to see the issue as “the attempted overthrow of the Constitution and liberty, by the usurpation of a power to crush states. The question of free-soil had no such importance in the eyes of the people of the border States, nor even of the seceded States, as to become at once a casus belli.”[10]

Lincoln did not like what he heard. He painted Southerners as insincere, a people of hollow words backed by no action, and dismissed their resolutions, speeches, and declarations as “a game of brag”[11] meant to intimidate the Federal administration.

Baldwin told Lincoln repeatedly that Virginia would not fight over the free-soil issue. As a basic point of fact, only about six percent of Southerners were slave owners, and slaveholding households made up perhaps twenty-five to thirty percent of Southern families. Fighting over slavery made little sense, especially given that slavery was already constitutionally legal. Coercion, however, was another matter: Baldwin emphasized that it would undoubtedly lead to further separation and likely war.

Baldwin probed for the primary sticking point, leading Lincoln to ask, “Well…what about the revenue? What would I do about the collection of duties?”[12] In response, Baldwin asked how much import revenue would be lost per year. Lincoln answered, “fifty or sixty millions.”[13]

Baldwin replied that a total of two hundred and fifty million dollars in lost revenue (based on an assumed four-year presidential term) would be trivial compared to the cost of war, and that Virginia’s plan was all that was necessary to resolve the issue. Lincoln also briefly voiced concern about whether the troops at Fort Sumter were being properly fed. Baldwin responded that the people of Charleston were feeding them and would continue to do so as long as a resolution was in sight.

Though Lincoln appeared genuinely touched by Baldwin’s plea for peace, he was alarmed at the prospect of lost revenue; he did not like the idea of the Southern States remaining out of the Union until a compromise could be reached. His reply underscored this deep concern: “And open Charleston, etc., as ports of entry, with their ten per cent tariff. What, then, would become of my tariff?”[14]

Though it was at Fort Sumter in Charleston Harbor that matters came to a head, the lower duties would have applied at, and attracted trade to, all Southern ports, such as Richmond, Savannah, Wilmington, New Orleans, Mobile, and Galveston.

Lincoln’s reply to Baldwin made it clear that slavery was not the central issue. He did not mention slavery but voiced alarm at the amount of revenue that would be lost if he allowed the Confederate States to exist as a separate country. Import duties made up the vast majority of federal revenue at that time.

Baldwin asked Lincoln whether he trusted him as an honest representative of the sentiment of Virginia and received an affirmative response. Having confirmed Lincoln’s confidence in him, Baldwin stated, “I tell you, before God and man, that if there is a gun fired at Sumter this thing is gone.”[15]

He stressed that action should be taken as soon as possible, warning that if the situation festered for two more weeks, it would likely be too late.

Lincoln paced about awkwardly in obvious dismay and exclaimed: “I ought to have known this sooner! You are too late, sir, too late! Why did you not come here four days ago, and tell me all this?”[16]

What Lincoln did not reveal in the conversation was that he had already authorized the reinforcement of Forts Sumter and Pickens on March 29, and the ships were preparing to sail.

Baldwin replied: “Why, Mr. President, you did not ask our advice. Besides, as soon as we received permission to tender it, I came by the first train, as fast as steam could bring me.”[17]

Once more, Lincoln responded: “Yes, but you are too late, I tell you, too late!”[18] Perhaps this was the moment when it sank in how seriously the Southern States viewed the situation.

Lincoln claimed secession was unconstitutional, though West Point had taught, using Rawle’s textbook, that the Union is a voluntary coalition of States and that secession was up to the people of the respective States. Conversely, Lincoln saw nothing wrong with coercion, which had historically been considered unconstitutional in both North and South. He felt secession automatically signaled war, when it should have signified the opposite. As Auburn University economics instructor John P. Sophocleus remarked of the Constitution, “if followed, civil war—the fight for control over the government—is impossible.”[19]

Lincoln made no promises and dismissed Baldwin. Later the same day, Baldwin had a lengthy conversation with Seward, from which he surmised that Seward wanted to work toward peace but felt conflict was very likely. Having fulfilled his duty, Baldwin returned to Virginia with the verdict. Dabney later concluded from Baldwin’s testimony that Lincoln had succumbed to the pro-war fanaticism of Stevens and abandoned Seward’s more sensible warnings about the unconstitutionality of coercion.

Stuart confirmed to Dabney the accuracy of Baldwin’s account. Indeed, Stuart, along with William B. Preston and George W. Randolph, spoke with Lincoln on April 12, 1861, and received virtually the same message as Baldwin. “I remember,” Stuart said, “that he used this homely expression: ‘If I do that, what will become of my revenue? I might as well shut up housekeeping at once.’”[20]

The most striking feature of Stuart’s meeting was Lincoln’s insinuation that he was not interested in war; yet the day after the meeting, the very train on which the delegates returned to Richmond carried the proclamation calling for 75,000 troops to coerce the seceded States.

Another attempt at compromise was described in the April 23, 1861, edition of the Baltimore Exchange and reprinted in the May 8, 1861, edition of the Memphis Daily Avalanche. It involved a meeting between Lincoln and a group led by Dr. Richard Fuller, a preacher at the Seventh Baptist Church in Baltimore. Fuller was a South Carolina native and a Southern supporter. The article states:

We learned that a delegation from five of the Young Men’s Christian Associations of Baltimore, consisting of six members each, yesterday (April 22, 1861) proceeded to Washington for an interview with the President, the purpose being to intercede with him in behalf [of] a peaceful policy, and to entreat him not to pass troops through Baltimore or Maryland.[21]

Fuller acted as chairman and conducted the interview. After Fuller’s plea for peace and for recognition of the rights of the Southern States, Lincoln responded, “But what am I to do?…what shall become of the revenue? I shall have no government? No resources?”[22]

Former U.S. President John Tyler was intimately familiar with the situation, and he worked diligently to avoid war. With the benefit of his father’s insight, Lyon Gardiner Tyler gave an account that echoes those of the Virginia and Maryland representatives:

…the deciding factor with him (Lincoln) was the tariff question. In three separate interviews, he asked what would become of his revenue if he allowed the government at Montgomery to go on with their ten percent tariff… Final action was taken when nine governors of high tariff states waited upon Lincoln and offered him men and supplies.[23]

As President Tyler’s son, Lyon Tyler almost certainly had inside information about the three meetings with Lincoln described above, especially in light of his father’s tireless attempts to achieve a peaceful resolution.

Dabney summed up the circumstances surrounding the war by identifying Lincoln’s reference to the sectional tariff as the tipping point. “His single objection, both to the wise advice of Colonel Baldwin and Mr. Stuart, was: ‘Then what would become of my tariffs?’”[24]

Lincoln saw a free-trade policy in the South as an economic threat to the North that could not be allowed to stand. Through Colonel Baldwin, Virginia had offered a viable way to avoid war and preserve the Union. Of Lincoln’s course of action, Dabney lamented, “he preferred to destroy the Union and preserve his [redistributive] tariffs. The war was conceived in duplicity, and brought forth in iniquity.”[25]

Notes

[1] Mildred Lewis Rutherford, A True Estimate of Abraham Lincoln & Vindication of the South (Wiggins, Mississippi: Crown Rights Book Company, 1997), 58-59. This quote appeared on page 11 of the December 25, 1922, edition of Barron’s. Original source: New Haven Register; copied in New York World, September 15, 1864.

[2] Dr. Grady McWhiney, former Professor at the University of Alabama, Texas Christian University, etc., said: “What passes as standard American history is really Yankee history written by New Englanders or their puppets to glorify Yankee heroes and ideals.” (From The Unforgiven, 11.)

[3] Robert L. Dabney, D.D., The Origin & Real Cause of the War, A Memoir of a Narrative Received of Colonel John B. Baldwin, Reprinted from Discussions, Volume IV, 2-3.

[4] Lee cited his West Point instruction from Rawle’s 1825 textbook, A View of the Constitution of the United States of America, which taught that the Union is a voluntary coalition and that States have a legal right to secede. Lee was duty-bound to fight for Virginia; he understood the meaning of Article III, Section 3. Virginia’s Alexander R. Boteler, while serving in the U.S. House of Representatives, warned the Lincoln Administration that Virginia would secede if there were a call to invade the Southern States.

[5] Dabney, 3.

[6] Ibid., 4.

[7] Ibid.

[8] Ibid., 8.

[9] “Interview Between President Lincoln and Col. John B. Baldwin, April 4th, 1861, Statements and Evidence,” Staunton Spectator (Staunton, Virginia: Spectator Job Office, D.E. Strasburg, Printer, 1866), 12, https://ia800301.us.archive.org/5/items/interviewbetween00bald/interviewbetween00bald.pdf (Accessed April 21, 2016).

[10] Dabney, 7.

[11] Ibid., 6.

[12] “Interview Between President Lincoln and Col. John B. Baldwin, April 4th, 1861, Statements and Evidence,” 12-13 (Accessed April 21, 2016).

[13] Ibid., 13.

[14] Dabney, 8.

[15] “Interview Between President Lincoln and Col. John B. Baldwin, April 4th, 1861, Statements and Evidence,” 13 (Accessed April 21, 2016).

[16] Dabney, 6.

[17] Ibid.

[18] Ibid.

[19] From a May 2013 conversation with John P. Sophocleus, Auburn University Economics Instructor.

[20] Dabney, 11.

[21] Bruce Gourley, “Baptists and the American Civil War: April 23, 1861,” In Their Own Words, April 23, 2011, http://www.civilwarbaptists.com/thisdayinhistory/1861-april-23/ (as reprinted in the Memphis Daily Avalanche, May 8, 1861, p. 1, col. 4) (Accessed April 21, 2016).

[22] Ibid.

[23] Lyon Gardiner Tyler, The Gray Book: A Confederate Catechism (Wiggins, Mississippi: Crown Rights Book Company—The Liberty Reprint Series, 1997), 5. Originally printed in Tyler’s Quarterly, Volume 33, October and January issues, 1935.

[24] Dabney, 14.

[25] Ibid.

About John M. Taylor

John M. Taylor, of Alexander City, Alabama, worked for over thirty years at Russell Corporation (subsequently Fruit of the Loom), primarily in transportation and logistics. In his second career, Taylor is Assistant Director at Adelia M. Russell Library in Alexander City. He holds a B.S. degree in Transportation from Auburn University and has completed nine MLIS courses at the University of Alabama. Taylor is married with two sons and two grandchildren.

Inspired by his late mother, who dearly loved the South and knew one of his Confederate ancestors, Taylor has been a member of the Sons of Confederate Veterans since 1989, editing both local and State newsletters, including eleven years as Editor of Alabama Confederate. He has also supported the Ludwig von Mises Institute since 1993.

Taylor’s book, Union At All Costs: From Confederation to Consolidation (Booklocker Publishing), was first released in January 2017.

Colonel Baldwin Meets Mr. Lincoln